Running an A/B Test lets you send up to 5 variations of your subject line or content to randomly selected recipients and then analyse the results to determine which variation had the most impact, measured by open rate or click-through rate. This helps you identify the types of content, keywords and offers your customers are most interested in.

When creating a new email campaign, you can choose to run an A/B Test on your subject lines or content.

Supported Test Types

We allow you to run two types of tests on your content & subject lines:

- Send Equally

The recipients of your campaign are split into random lists of equal size. Each list receives one of your variations at the same time.

Once the campaign has completed delivery, you can view the campaign report to see which variation had the best engagement.

- Test First, Then Send The Best

With this type of test, a small subset of your recipients is split into random lists, each of which receives a different variation. After a specified amount of time, the platform determines which variation performed best (using the metric you chose during setup) and sends that variation to the remaining recipients.
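
The two ways of splitting recipients described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the platform's implementation: the function names, the seeded shuffle and the round-robin dealing into groups are all hypothetical choices made for the example, and the 2 to 5 variation limit is taken from this article.

```python
import random
from typing import List, Sequence, Tuple

# Limits taken from this article: 2 variations by default, up to 5.
MIN_VARIATIONS, MAX_VARIATIONS = 2, 5


def _check(n_variations: int) -> None:
    if not MIN_VARIATIONS <= n_variations <= MAX_VARIATIONS:
        raise ValueError(f"expected 2-5 variations, got {n_variations}")


def _shuffled(recipients: Sequence[str], seed: int) -> List[str]:
    """Return a randomly shuffled copy, leaving the caller's list untouched."""
    pool = list(recipients)
    random.Random(seed).shuffle(pool)
    return pool


def split_equally(recipients: Sequence[str], n_variations: int, seed: int = 0) -> List[List[str]]:
    """Hypothetical 'Send Equally': every recipient lands in one of n random groups."""
    _check(n_variations)
    pool = _shuffled(recipients, seed)
    # Dealing round-robin keeps group sizes within one recipient of each other.
    return [pool[i::n_variations] for i in range(n_variations)]


def split_test_first(
    recipients: Sequence[str], n_variations: int, test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[List[str]], List[str]]:
    """Hypothetical 'Test First': a random test subset is split equally;
    everyone else is held back until a winner is chosen."""
    _check(n_variations)
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    pool = _shuffled(recipients, seed)
    test_size = round(len(pool) * test_fraction)
    test_pool, remainder = pool[:test_size], pool[test_size:]
    groups = [test_pool[i::n_variations] for i in range(n_variations)]
    return groups, remainder


if __name__ == "__main__":
    recipients = [f"user{i}@example.com" for i in range(1000)]
    print([len(g) for g in split_equally(recipients, 3)])  # [334, 333, 333]
    groups, held_back = split_test_first(recipients, 3, test_fraction=0.2)
    print([len(g) for g in groups], len(held_back))        # [67, 67, 66] 800
```

Shuffling first and then slicing guarantees that every recipient is assigned exactly once and that group membership is random rather than based on list order.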

A/B Tests are a great way to understand your customers and their interests. After each test, review the reports to identify the subject lines, content and offers that generated the highest engagement. This lets you run increasingly specialised tests and plan future offers, campaigns and automations.

Testing Subject Lines

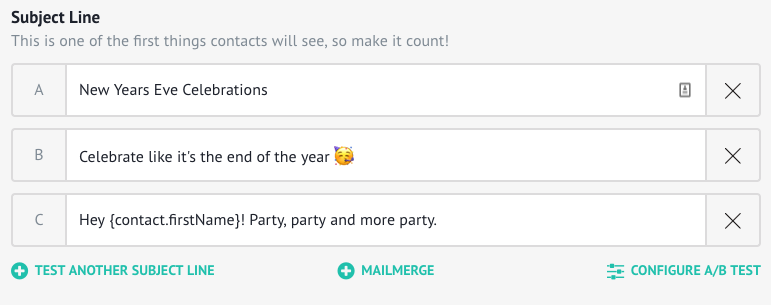

You can test up to 5 different subject lines. When creating your campaign, select "Test Subject Lines" and proceed to the next step.

By default, the platform prompts you for two subject lines. Click "Test Another Subject Line" to add more, up to a total of 5.

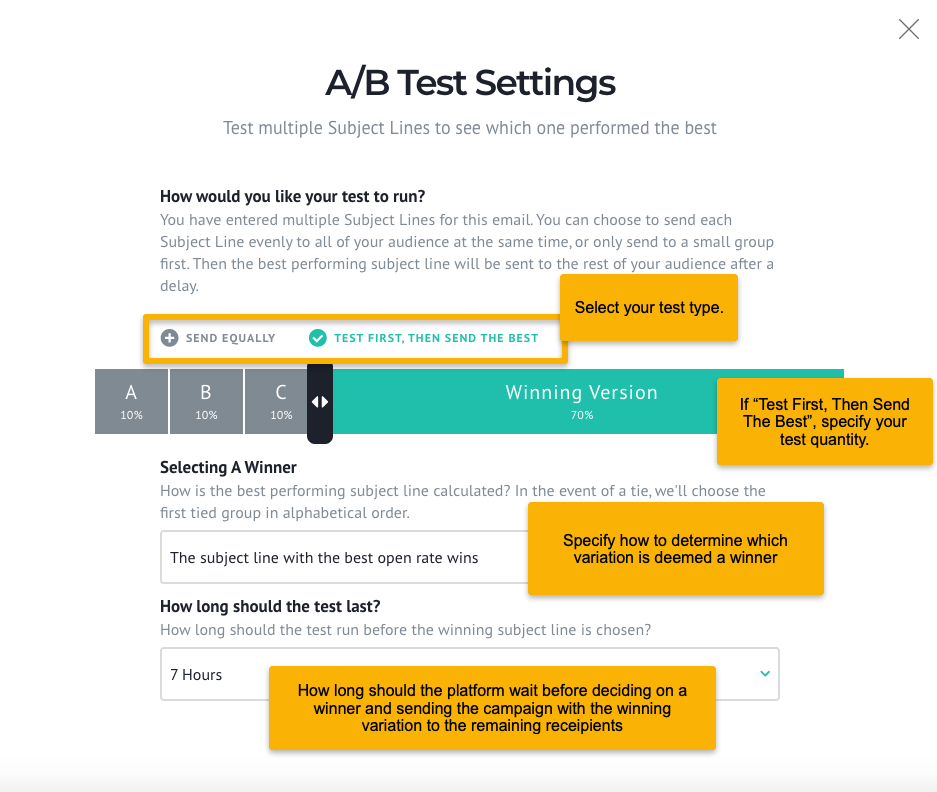

Once all subject lines have been specified, click "Configure A/B Test" to choose your test settings. By default, a "Send Equally" test is run.

Clicking "Test First, Then Send The Best" lets you run a winning-variation campaign. As part of this, you specify:

- what percentage of your total recipient list receives the campaign immediately (we recommend 20% to 30%)
- how long the platform should wait before sending to the remaining recipients
- how the winning variation is determined (best open rate or best click-through rate)
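
Choosing the winner comes down to comparing one rate across the test groups. The sketch below is illustrative only: the `VariationStats` and `pick_winner` names are hypothetical, and it assumes click-through rate means unique clicks divided by delivered emails (some tools divide by opens instead). The platform's actual formula and tie-breaking rule are not documented here.

```python
from dataclasses import dataclass
from typing import List, Literal

Metric = Literal["open_rate", "click_through_rate"]


@dataclass
class VariationStats:
    """Hypothetical per-variation counts gathered during the waiting period."""
    name: str
    delivered: int
    unique_opens: int
    unique_clicks: int

    @property
    def open_rate(self) -> float:
        return self.unique_opens / self.delivered if self.delivered else 0.0

    @property
    def click_through_rate(self) -> float:
        # Assumption: CTR = unique clicks / delivered emails.
        return self.unique_clicks / self.delivered if self.delivered else 0.0


def pick_winner(stats: List[VariationStats], metric: Metric) -> VariationStats:
    """Return the variation with the highest value of the chosen metric.

    On a tie, max() keeps the first variation in the list.
    """
    if not stats:
        raise ValueError("no variations to compare")
    return max(stats, key=lambda s: getattr(s, metric))


if __name__ == "__main__":
    stats = [
        VariationStats("Subject A", delivered=100, unique_opens=25, unique_clicks=4),
        VariationStats("Subject B", delivered=100, unique_opens=20, unique_clicks=6),
    ]
    print(pick_winner(stats, "open_rate").name)           # Subject A
    print(pick_winner(stats, "click_through_rate").name)  # Subject B
```

The example data shows why the metric matters: the same two variations produce a different winner depending on whether you optimise for opens or for clicks.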

If you run a "Test First, Then Send The Best" campaign, remember that the waiting period delays your overall campaign delivery time.

As such, send your campaign early enough that your customers don't receive it too late. For example, if a test begins at 3pm and the platform waits 7 hours before sending the winning variation, the majority of your customers will receive the campaign at 10pm.
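
The timing arithmetic can be worked both ways: forwards from the test start, or backwards from the time you want most recipients to receive the email. This is a small sketch with hypothetical helper names; it assumes the remaining recipients are sent to exactly when the waiting period ends and ignores how long the send itself takes.

```python
from datetime import datetime, timedelta


def remaining_send_time(test_start: datetime, wait: timedelta) -> datetime:
    """When the held-back recipients are sent the winning variation."""
    return test_start + wait


def latest_test_start(target_send: datetime, wait: timedelta) -> datetime:
    """Latest test start that still lets the main send go out by target_send."""
    return target_send - wait


if __name__ == "__main__":
    wait = timedelta(hours=7)
    start = datetime(2024, 1, 1, 15, 0)  # 3pm; the date itself is arbitrary
    print(remaining_send_time(start, wait).strftime("%H:%M"))  # 22:00
    target = datetime(2024, 1, 1, 18, 0)  # want most customers to have it by 6pm
    print(latest_test_start(target, wait).strftime("%H:%M"))   # 11:00
```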

Testing Content

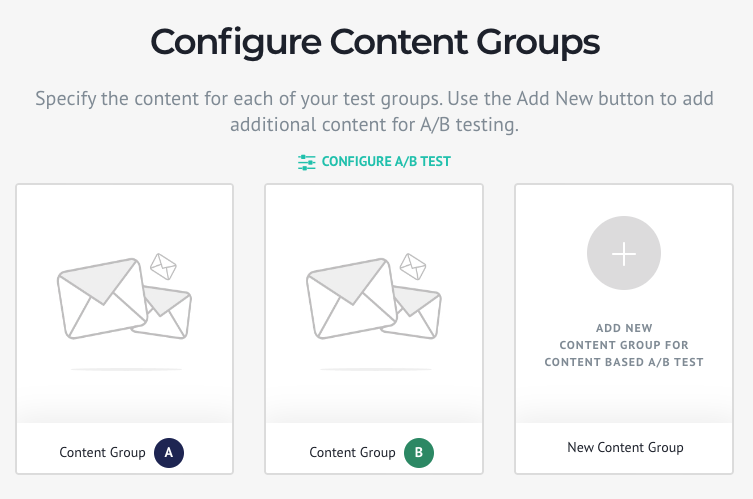

As with subject lines, the platform allows you to specify up to 5 different variations of content to be sent to random users.

After you choose to run an A/B Test on content, the platform lets you specify two content variations. Click "New Content Group" to add more variations.

To specify your content, hover over each of your content groups and click on "Select". You can now specify the content for that group. Content can be specified by selecting from recent emails, using a template or creating your email completely from scratch. After creating and saving your content, you will be taken back to the "Configure Content Groups" page to allow you to specify the content for other groups.

Once all content has been specified, click on "Configure A/B Test" at the top of the page to specify your test settings.

You can split the recipient list into equal groups, with each group receiving different content, or you can run a test by sending your content to a small group and then distributing the best-performing content to the rest of the list. Your A/B Test settings are configured the same way as for subject line tests. The campaign report provides a breakdown of each content group with its individual report, along with an aggregate for the whole campaign.
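
When reading a campaign-wide figure, it helps to know how such an aggregate is normally computed: from summed counts, not by averaging each group's rate. The sketch below assumes that approach; the `GroupReport` structure, the group labels and the numbers are hypothetical, and the platform's own report may compute its aggregate differently.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GroupReport:
    """Hypothetical per-content-group counts from a campaign report."""
    group: str
    delivered: int
    unique_opens: int
    unique_clicks: int


def aggregate(reports: List[GroupReport]) -> Dict[str, float]:
    """Campaign-wide rates from summed counts (a delivery-weighted average)."""
    delivered = sum(r.delivered for r in reports)
    opens = sum(r.unique_opens for r in reports)
    clicks = sum(r.unique_clicks for r in reports)
    if delivered == 0:
        return {"delivered": 0, "open_rate": 0.0, "click_through_rate": 0.0}
    return {
        "delivered": delivered,
        "open_rate": opens / delivered,
        "click_through_rate": clicks / delivered,
    }


if __name__ == "__main__":
    reports = [
        GroupReport("Content A (test)", 100, 30, 5),
        GroupReport("Content B (test)", 100, 20, 4),
        GroupReport("Content A (remaining recipients)", 800, 240, 41),
    ]
    print(aggregate(reports))
    # {'delivered': 1000, 'open_rate': 0.29, 'click_through_rate': 0.05}
```

Here the per-group open rates are 30%, 20% and 30%; a plain average of those would give about 26.7%, whereas weighting by the number of emails delivered gives 29%. The difference matters most for "Test First" campaigns, where group sizes are very uneven.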

The variations between your content groups should be subtle. The overall content (including layout, theme, design, colours, etc.) should be the same. When testing content variations, we recommend testing only specific items (e.g. a 2-for-1 offer vs a 50% discount). Depending on your objective, the colour and position of your buttons also make good tests: do red buttons get more clicks than green buttons? Does placing your call-to-action at the top receive more clicks than placing it at the bottom?

Too much variation between groups makes it hard to tell how your customers are responding. If your variations have both different offers and different buttons, you won't be able to tell whether the button or the offer drove the increase in engagement. Test only one item at a time to get a clearer understanding.